QWEN3 CODER: CHINA'S OPEN-SOURCE AI BOMBSHELL SHAKING SILICON VALLEY

Following the success of Qwen3, Alibaba has released Qwen3 Coder, a specialized large language model focused on programming and software development. Built on the same groundbreaking Mixture of Experts (MoE) architecture, the new model is open-source under the Apache 2.0 license, a move that's sending shockwaves through the AI world.

With a staggering 480 billion total parameters (35 billion active at a time), Qwen3 Coder rivals, and in certain benchmarks even outperforms, top Western models like Claude 4, Gemini 2.5 Pro, and OpenAI's GPT models, while remaining freely accessible to the global developer community.

That said,

"People don’t actually use large language models just for benchmarks… do they?"

Let's be honest: benchmark scores should always be taken with a grain of salt, not just in AI but across industries. If you've been around long enough, you might remember when DxOMark was considered the gold standard for smartphone camera quality, back when the first Google Pixel phones came out. And eventually? Its scores lost much of their influence. People quickly realized that real-world performance often tells a very different story.

So no, I’m not dismissing AI benchmarks entirely. They have their place. But it’s worth noting that many companies optimize their models specifically to score well, rather than focusing on practical use. That’s something we all need to be aware of when evaluating any “state-of-the-art” claim.

TL;DR: Based on my personal experience and limited hands-on time with the model, claiming that it surpasses, or is even on par with, Anthropic's state-of-the-art Claude 4 might be a bit of an overstatement. Still, the sheer scale of Qwen3 Coder and the fact that it's fully open-source make it an impressive and important release.

Qwen3 Coder VS THE WEST: A SERIOUS CONTENDER

Published evaluations suggest that Qwen3 Coder performs competitively in code generation, debugging, and multi-language software tasks. While Western companies closely guard their models, Alibaba has gone in the opposite direction, offering full weights, training details, and a growing suite of smaller, quantized variants for easier deployment.

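What makes this even more impressive is that, thanks to MoE, only about 35 billion parameters are active for any given token. That gives Qwen3 Coder roughly the per-token compute cost of a much smaller model while drawing on the capacity of a much larger one, although all 480 billion parameters still have to be stored and loaded into memory.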

WHAT IS Mixture of Experts (MoE), AND WHY DOES IT MATTER?

Qwen3 Coder leverages MoE to deliver massive performance without requiring all model components to be active simultaneously. This architectural choice brings big advantages:

- Selective Activation: A router picks only the most relevant "experts" for each token, so most of the network sits idle on any given step, reducing computational cost.

- Efficiency at Scale: Offers powerful performance without needing trillion-parameter brute force.

- Scalability: Total parameter count can grow without a matching increase in per-token compute (memory and storage requirements still grow with model size).

- Deployability: Enables creation of smaller, optimized versions for consumer hardware.

With Qwen3 Coder, Alibaba is proving that smarter architecture beats sheer size.
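To make the routing idea concrete, here is a toy top-k gating sketch in Python. It illustrates the general MoE technique only and is not Qwen3 Coder's actual implementation: the expert count, top-k value, and dimensions are made-up assumptions, and each "expert" is a tiny matrix rather than a full feed-forward block.

```python
import math
import random

# Toy sizes chosen for illustration only; these are NOT Qwen3 Coder's real settings.
NUM_EXPERTS = 16
TOP_K = 2
DIM = 4

random.seed(0)

# Each "expert" is a DIM x DIM matrix standing in for a feed-forward block.
experts = [[[random.gauss(0, 1) for _ in range(DIM)] for _ in range(DIM)]
           for _ in range(NUM_EXPERTS)]
# The router holds one scoring vector per expert.
router = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]


def matvec(m, v):
    """Multiply matrix m by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def moe_forward(token):
    """Route one token through only TOP_K experts; return (output, chosen expert ids)."""
    # Score every expert for this token (cheap: one dot product per expert).
    logits = [sum(w * x for w, x in zip(r, token)) for r in router]
    # Keep the TOP_K highest-scoring experts; the others are never evaluated.
    chosen = sorted(range(NUM_EXPERTS), key=lambda i: logits[i], reverse=True)[:TOP_K]
    # Softmax over the chosen experts' logits gives their mixing weights.
    peak = max(logits[i] for i in chosen)
    weights = {i: math.exp(logits[i] - peak) for i in chosen}
    total = sum(weights.values())
    out = [0.0] * DIM
    for i in chosen:
        y = matvec(experts[i], token)  # only selected experts do real work
        out = [o + (weights[i] / total) * yi for o, yi in zip(out, y)]
    return out, chosen


token = [0.5, -1.0, 0.25, 2.0]
output, used = moe_forward(token)
print(f"experts used: {sorted(used)} of {NUM_EXPERTS}")
# Share of Qwen3 Coder's parameters active per token, using the article's figures.
print(f"active share: {35 / 480:.1%}")
```

Only the chosen experts run their matrix multiply, which is where the compute savings come from; every expert still has to sit in memory because any of them may be picked for the next token.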

AN ACTUAL OPEN-SOURCE RELEASE, NOT JUST A MARKETING BUZZWORD

Alibaba isn’t just paying lip service to open-source. With Qwen3 Coder, they’ve made the entire package available:

- Full model weights available for download

- Developer-friendly documentation and command-line tools

This stands in stark contrast to Western counterparts like GPT-4 and Claude 4, which remain locked behind APIs and usage restrictions.

THE CENSORSHIP QUESTION: IS IT REALLY “OPEN”?

Despite its technical openness, Qwen3 Coder, like other Chinese-developed models such as DeepSeek-V3 and DeepSeek-R1, may still be subject to hidden constraints:

- Previous Chinese models have avoided or refused to answer questions on politically sensitive topics such as Taiwan, Tiananmen Square, and Uyghur rights.

- Qwen3 Coder, while primarily a code-focused model, can still respond to general questions — and in doing so, it appears to inherit some of these silent filters, quietly ignoring or deflecting restricted topics.

- While the weights are open, the model's actual behaviour may reflect state-controlled messaging limits.

So while technically accessible, these models may be "open" only within politically acceptable boundaries, a key distinction for researchers seeking truly uncensored tools.

INSTALLING/TRYING Qwen3 Coder: WHAT TO EXPECT

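The full Qwen3-Coder-480B model is extremely large. Even quantized builds need around 227GB of storage, the full-precision BF16 weights come to roughly 960GB (480 billion parameters × 2 bytes), and running it calls for high-end enterprise GPUs, well beyond what typical consumer hardware can handle.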
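A quick back-of-the-envelope calculation shows where storage figures like these come from. This sketch only counts raw weight bytes; it assumes every parameter is stored at the same precision and ignores file overhead, the KV cache, and activations, so real checkpoints and runtime memory will differ somewhat.

```python
TOTAL_PARAMS = 480e9  # Qwen3 Coder's total parameter count, from the article


def weights_gb(params, bits_per_param):
    """Approximate raw weight size in gigabytes (1 GB = 1e9 bytes)."""
    return params * bits_per_param / 8 / 1e9


for label, bits in [("BF16", 16), ("FP8", 8), ("4-bit", 4)]:
    print(f"{label:>6}: ~{weights_gb(TOTAL_PARAMS, bits):,.0f} GB")

# Working backwards from the ~227GB figure quoted above.
print(f"227 GB implies ~{227e9 * 8 / TOTAL_PARAMS:.2f} bits per parameter")
```

Because MoE reduces compute per token but not the number of stored parameters, all 480 billion weights count toward these totals.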

For easier access, you can try Qwen3 Coder through the official online demo at chat.qwen.ai or via API. These services are hosted in China, so it's worth being mindful of potential data privacy considerations.

You can also try it on Hugging Face.

Alternatively, the most popular option right now is OpenRouter.ai, which offers free API access to the Qwen3-Coder-480B-A35B-Instruct model through a provider named Chutes. Chutes appears to be based in the US, though it may have close ties to China. While this is a convenient way to experiment with the model, there's an important trade-off to consider:

If you use the free API via OpenRouter, your interactions may be logged and used for training. In other words, your privacy may be compromised, which is worth weighing before integrating the API into private or sensitive projects.

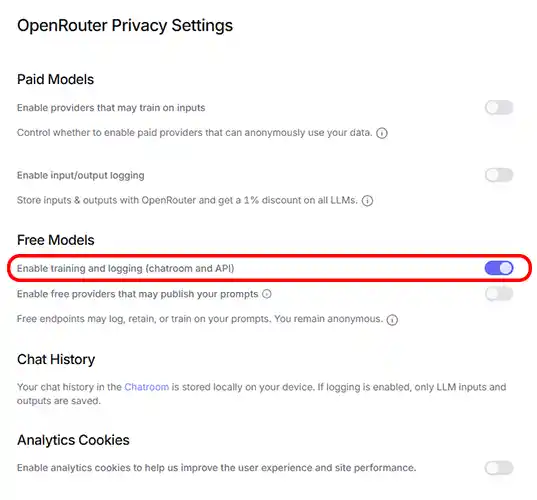

In my testing, disabling the “Enable training and logging (chatroom and API)” option in OpenRouter’s privacy settings caused API requests to fail entirely — suggesting that logging must be enabled for the free access to work.

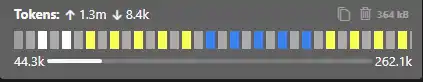

The free tier comes with a reasonable usage limit, which proved sufficient for basic experimentation. The screenshot above, from the Cline dashboard, shows the free API token before it hit the daily usage cap, at which point access was temporarily paused.
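For readers who want to script against the free OpenRouter route rather than use Cline, here is a minimal standard-library sketch of an OpenAI-style chat completion request. The model ID `qwen/qwen3-coder:free` and the environment variable name `OPENROUTER_API_KEY` are assumptions for illustration; check the model page for the exact ID. Given the logging behaviour described above, don't send anything sensitive through this route.

```python
import json
import os
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
# Assumption: the free variant is exposed under this ID; verify it on the model page.
FREE_MODEL_ID = "qwen/qwen3-coder:free"


def build_request(prompt, api_key, model=FREE_MODEL_ID):
    """Build (but do not send) a chat completion request for OpenRouter."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt):
    """Send the request and return the first reply's text (needs network and a key)."""
    # OPENROUTER_API_KEY is a hypothetical variable name chosen for this sketch.
    req = build_request(prompt, os.environ["OPENROUTER_API_KEY"])
    with urllib.request.urlopen(req, timeout=120) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]


if __name__ == "__main__" and "OPENROUTER_API_KEY" in os.environ:
    print(ask("Write a Python function that reverses a linked list."))
```

Keeping request construction separate from sending makes it easy to inspect exactly what leaves your machine before any network call happens.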

If you're serious about building a project with Qwen3-Coder-480B-A35B-Instruct, you can opt for the paid API via OpenRouter. Multiple providers offer access to this model, and you can compare them at openrouter.ai/qwen/qwen3-coder. According to OpenRouter, paid API usage is not used for training, though this can depend on your privacy settings and the specific provider you choose. With careful provider selection, it can be a suitable option for production or privacy-sensitive applications. (This is not a promotion.)

However, keep in mind that many of the available servers are based in China. You can check the provider's location directly on the model page before selecting one.
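If you want to enforce those choices in code rather than only in account settings, OpenRouter accepts provider-routing preferences in the request body. The sketch below assumes the `provider` fields `data_collection` and `ignore` behave as described in OpenRouter's provider-routing documentation; confirm the field names there before relying on them. `ExampleProvider` is a hypothetical placeholder, not a real provider name.

```python
import json

# Paid model ID, taken from the model page URL openrouter.ai/qwen/qwen3-coder.
PAID_MODEL_ID = "qwen/qwen3-coder"


def build_paid_body(prompt, excluded_providers=()):
    """Build a chat request body that asks OpenRouter to avoid data-collecting providers.

    Assumed field names: "data_collection" and "ignore" under "provider".
    """
    return {
        "model": PAID_MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "provider": {
            # Only route to providers that do not store or train on prompts.
            "data_collection": "deny",
            # Skip providers ruled out after checking their location on the model page.
            "ignore": list(excluded_providers),
        },
    }


# "ExampleProvider" is hypothetical; use real provider names from the model page.
body = build_paid_body("Refactor this function for readability.", ["ExampleProvider"])
print(json.dumps(body, indent=2))
```

The body is posted to OpenRouter's chat completions endpoint like any other request; putting the preferences in each request keeps them visible in your code instead of relying solely on account-level settings.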

WHY THIS RELEASE CHANGES EVERYTHING

Here’s why Qwen3 Coder is more than just another AI model:

- Breaks the barrier: Offers performance reportedly comparable to Claude 4, but freely accessible.

- Democratizes innovation: Empowers developers worldwide to work with frontier-level AI without enterprise budgets.

- Applies pressure on Big Tech: Western firms may face growing criticism for paywalls and secrecy.

- Raises ethical concerns: Promotes discussion on whether government-controlled models can ever be truly “open.”

This model isn't just a technical release; it's a political and economic statement.

TECH SPECS AT A GLANCE

| Feature | Details |
|---|---|
| Model Name | Qwen3-Coder (Qwen3-Coder-480B-A35B-Instruct) |
| Architecture | Mixture of Experts (MoE) |
| Total Parameters | 480B total, 35B active per token |
| License | Apache 2.0 |
| Storage Size | Approx. 960 GB at BF16 (full model); approx. 200–230 GB for 3–4-bit quantized builds |
| Performance | Competitive with Claude 4 on code benchmarks (per Alibaba's reported results) |
| Availability | Full weights and tools released publicly |

FINAL THOUGHTS: A WIN WITH A CAVEAT

Qwen3 Coder is an undeniable technical achievement. It challenges the dominance of closed Western models and provides a powerful new tool for global developers. But it also forces us to ask harder questions:

- Can a model be open if it avoids certain truths?

- Are we trading transparency for influence?

- Is “open-source” still meaningful when filtered through geopolitical interests?

For now, Qwen3 Coder is both a gift and a warning: a reminder that openness in AI must be judged not only by license terms but by the freedom of expression a model truly allows.

I plan to test Qwen3 Coder further and will share my findings on Twitter. More to come as this story unfolds.